Machine Learning Models

Through university-led projects, I have worked with a variety of machine learning models applied across different use cases, ranging from predicting real estate prices to reconstructing images with deep learning techniques. These projects gave me exposure to both classical and advanced models, while also allowing me to assess performance using different evaluation metrics to balance accuracy, complexity, and interpretability. To present my work, I produced a formal report adhering to academic literature standards and developed a poster designed to communicate the core ideas to a general audience.

Convolutional Autoencoders (CAE)

Decision Tree Regressor vs Linear Regression

DTR - DECISION TREE REGRESSOR

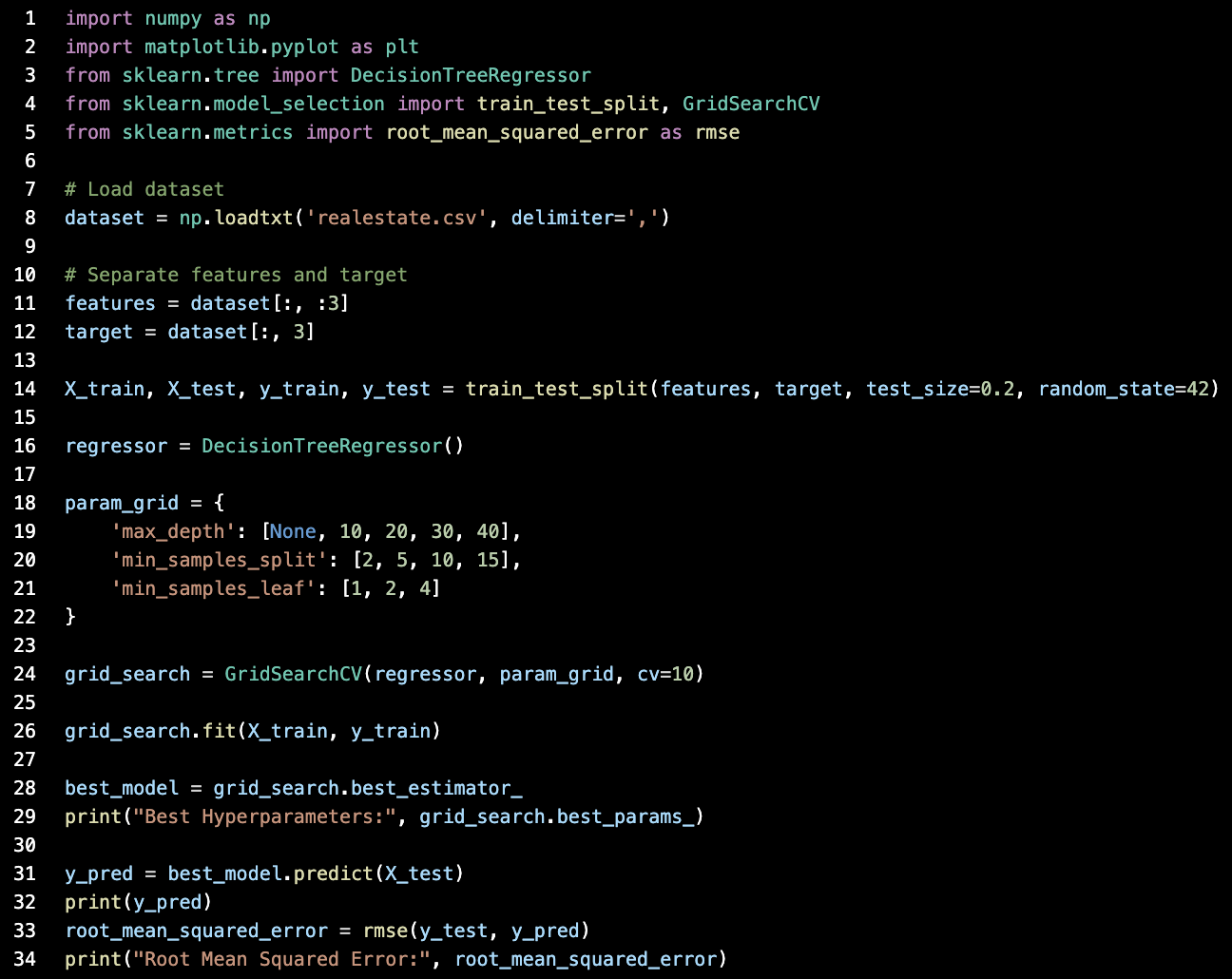

The Decision Tree Regressor (DTR) was implemented to capture non-linear patterns in real estate prices. By recursively splitting the dataset on feature thresholds, the model uncovered complex relationships between the price and inputs such as house age, distance to transport links, and nearby amenities. The implementation covered data preprocessing, a train-test split, and tuning of the maximum depth, minimum samples per split, and minimum samples per leaf. Constraining these hyperparameters limited overfitting, while the fitted tree still showed which features mattered most. Performance was measured using Root Mean Squared Error (RMSE) on unseen data, which served as the benchmark for predictive accuracy.
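The workflow described above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: it assumes scikit-learn, uses synthetic stand-in data with hypothetical feature names, and uses a cross-validated grid search for the tuning step, which the write-up does not specify for this model.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data (assumption): three features loosely mirroring
# house age, distance to transport links, and number of nearby amenities.
rng = np.random.default_rng(0)
n = 400
house_age = rng.uniform(0, 40, n)
distance = rng.uniform(50, 5000, n)
amenities = rng.integers(0, 11, n)
X = np.column_stack([house_age, distance, amenities])
# Non-linear synthetic price signal plus noise.
y = (50 - 0.3 * house_age - 8 * np.log1p(distance / 100)
     + 1.5 * amenities + rng.normal(0, 2, n))

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Candidate values are illustrative, not the ones used in the project.
param_grid = {
    "max_depth": [3, 5, 8],
    "min_samples_split": [2, 10, 20],
    "min_samples_leaf": [1, 5, 10],
}
search = GridSearchCV(
    DecisionTreeRegressor(random_state=0),
    param_grid,
    scoring="neg_root_mean_squared_error",
    cv=5,
)
search.fit(X_train, y_train)

# Evaluate the tuned tree on the held-out test set.
best_tree = search.best_estimator_
pred = best_tree.predict(X_test)
rmse = float(np.sqrt(np.mean((y_test - pred) ** 2)))

feature_names = ["house_age", "distance", "amenities"]
importances = dict(zip(feature_names, best_tree.feature_importances_))
print("best params:", search.best_params_)
print("test RMSE:", rmse)
print("feature importances:", importances)
```

The three tuned parameters all cap how finely the tree can partition the training data, which is why they act as the main guard against overfitting here.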

Project Overview: Convolutional Autoencoders (CAE)

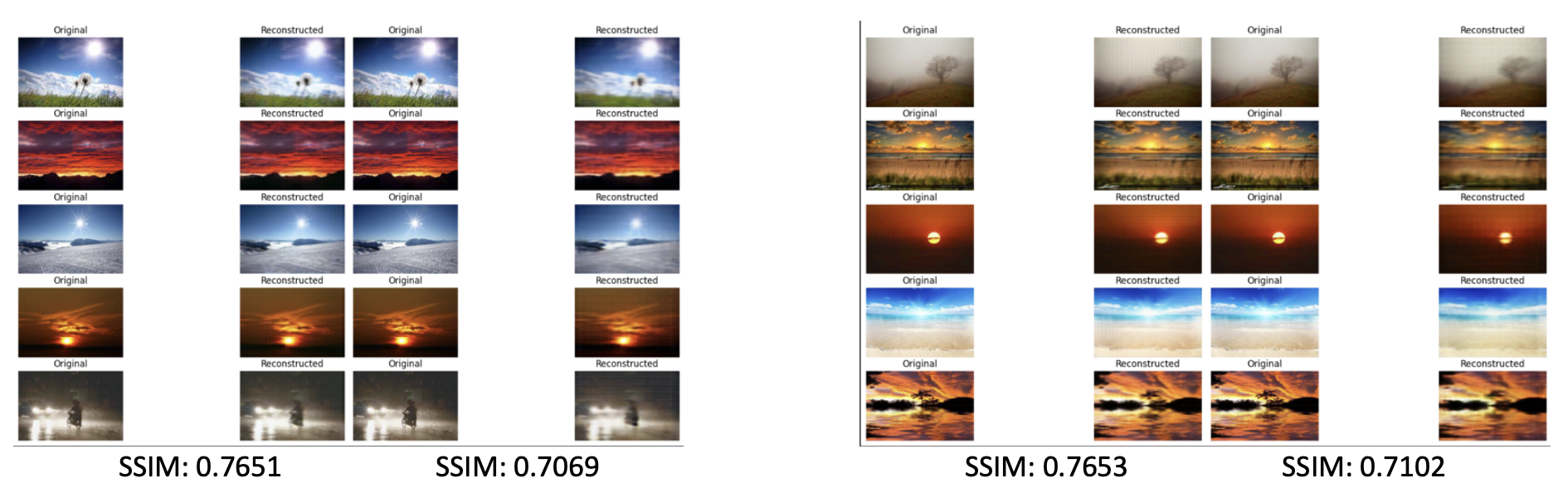

This project focused on implementing convolutional autoencoders (CAEs) for lossy image compression, balancing efficiency with reconstruction quality. The model architecture incorporated convolutional layers for feature extraction, a bottleneck representation for compression, and transpose convolutions for decoding. Key optimisations included strided convolutions in place of pooling, batch normalization for stable convergence, and LeakyReLU activations to improve gradient flow. Data preprocessing involved normalization, channel reordering, and tensor conversion for PyTorch compatibility. Hyperparameters were tuned through grid search, which selected a learning rate of 0.001 and a batch size of 64.
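A rough PyTorch sketch of this kind of architecture is shown below. The layer widths, the 32x32 RGB input size, the LeakyReLU slope, the final sigmoid, and the Adam optimizer are all assumptions made for illustration; only the learning rate of 0.001, the batch size of 64, and the general design (strided convolutions, batch normalization, LeakyReLU, transpose convolutions) come from the project description.

```python
import torch
from torch import nn


class ConvAutoencoder(nn.Module):
    """Small convolutional autoencoder (hypothetical layer sizes)."""

    def __init__(self, in_channels=3, latent_channels=8):
        super().__init__()
        # Encoder: strided convolutions downsample instead of pooling.
        self.encoder = nn.Sequential(
            nn.Conv2d(in_channels, 16, kernel_size=3, stride=2, padding=1),  # 32 -> 16
            nn.BatchNorm2d(16),
            nn.LeakyReLU(0.2),
            nn.Conv2d(16, latent_channels, kernel_size=3, stride=2, padding=1),  # 16 -> 8
            nn.BatchNorm2d(latent_channels),
            nn.LeakyReLU(0.2),
        )
        # Decoder: transpose convolutions upsample back to the input size.
        self.decoder = nn.Sequential(
            nn.ConvTranspose2d(latent_channels, 16, kernel_size=3, stride=2,
                               padding=1, output_padding=1),  # 8 -> 16
            nn.BatchNorm2d(16),
            nn.LeakyReLU(0.2),
            nn.ConvTranspose2d(16, in_channels, kernel_size=3, stride=2,
                               padding=1, output_padding=1),  # 16 -> 32
            nn.Sigmoid(),  # keep outputs in [0, 1] to match normalized inputs
        )

    def forward(self, x):
        return self.decoder(self.encoder(x))


# Preprocessing: fake uint8 images in height-width-channel order are
# reordered to channel-first and scaled to [0, 1] as float tensors.
torch.manual_seed(0)
raw = torch.randint(0, 256, (64, 32, 32, 3), dtype=torch.uint8)  # batch of 64
batch = raw.permute(0, 3, 1, 2).float() / 255.0

model = ConvAutoencoder()
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)  # Adam is an assumption
loss_fn = nn.MSELoss()

# One training step on the reconstruction objective.
latent = model.encoder(batch)
recon = model(batch)
loss = loss_fn(recon, batch)
optimizer.zero_grad()
loss.backward()
optimizer.step()

print("input:", tuple(batch.shape), "bottleneck:", tuple(latent.shape))
print("reconstruction loss:", loss.item())
```

With these assumed sizes the bottleneck holds 8 x 8 x 8 = 512 values per image against 3 x 32 x 32 = 3072 in the input, so the encoder performs a 6x reduction before decoding.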

Performance was assessed using both Mean Squared Error (MSE), which measures pixel-level error, and the Structural Similarity Index Measure (SSIM), which better reflects perceived image quality. Experiments showed that deeper networks and longer training improved image fidelity, with SSIM scores reaching ~0.81. The project was formally documented in a scientific report structured to academic standards, detailing the methodology, experiments, results, and key trade-offs. The report also highlighted future improvements, such as integrating SSIM into the loss function to further enhance reconstruction quality.
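To make the two metrics concrete, here is a small NumPy sketch. Note the simplification: standard SSIM is averaged over local sliding windows, whereas `global_ssim` below (a hypothetical helper name) applies the SSIM formula once over the whole image, so its values will not match a windowed implementation. The constants follow the commonly used defaults of 0.01 and 0.03 times the data range.

```python
import numpy as np


def mse(original, reconstructed):
    """Mean squared pixel error between two images of equal shape."""
    return float(np.mean((original - reconstructed) ** 2))


def global_ssim(original, reconstructed, data_range=1.0):
    """SSIM formula evaluated over the whole image as a single window."""
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = original.mean(), reconstructed.mean()
    var_x, var_y = original.var(), reconstructed.var()
    cov_xy = np.mean((original - mu_x) * (reconstructed - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


# Synthetic stand-in for an image and its imperfect reconstruction.
rng = np.random.default_rng(0)
image = rng.random((32, 32))
noisy = np.clip(image + rng.normal(0, 0.1, image.shape), 0.0, 1.0)

print("MSE:", mse(image, noisy))
print("global SSIM:", global_ssim(image, noisy))
```

MSE is 0 and SSIM is 1 for a perfect reconstruction; lower MSE and higher SSIM both indicate better fidelity, but they can disagree on which of two reconstructions looks better.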

LINEAR REGRESSION

The Linear Regression model served as a simpler, more interpretable baseline that assumes a linear relationship between the features and house prices. Its implementation included data preparation, coefficient estimation, and residual analysis to assess fit quality. While linear regression could not capture non-linear trends, it had lower variance and offered transparency by showing the impact of each feature directly through its coefficients. Accuracy was again assessed using RMSE, allowing a clear comparison against the more flexible decision tree model. Together, the two implementations demonstrated the trade-off between interpretability and complexity in predictive modelling.
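The baseline can be illustrated with an ordinary least squares fit in plain NumPy. This is a sketch under stated assumptions, not the project's code: the data is synthetic, the feature names and true coefficients are invented for the example, and the train-test split is a simple 80/20 cut.

```python
import numpy as np

# Synthetic stand-in data (assumption): price depends linearly on three features.
rng = np.random.default_rng(0)
n = 200
X = np.column_stack([
    rng.uniform(0, 40, n),     # house age in years
    rng.uniform(0.05, 5, n),   # distance to transport in km
    rng.integers(0, 11, n),    # number of nearby amenities
])
true_coef = np.array([-0.3, -4.0, 1.5])
y = 40 + X @ true_coef + rng.normal(0, 2, n)

# Simple 80/20 train-test split (rows are already in random order).
split = int(0.8 * n)
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]

# Coefficient estimation: add an intercept column and solve least squares.
A_train = np.column_stack([np.ones(len(X_train)), X_train])
coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)

# Residual analysis on the training data.
residuals = y_train - A_train @ coef

# RMSE on the held-out data, comparable with the decision tree's RMSE.
A_test = np.column_stack([np.ones(len(X_test)), X_test])
rmse = float(np.sqrt(np.mean((y_test - A_test @ coef) ** 2)))

print("intercept:", coef[0])
print("coefficients:", dict(zip(["house_age", "distance", "amenities"], coef[1:])))
print("mean training residual:", residuals.mean())
print("test RMSE:", rmse)
```

Each coefficient reads directly as the estimated change in price per unit change in that feature, which is the interpretability advantage over the tree.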